
Google Cloud Vision API is a powerful tool that lets developers integrate advanced image analysis into their applications. The API can detect and extract information from images, including text, objects, landmarks, and facial expressions. (Video analysis is handled by a separate product, the Video Intelligence API.)

One of the key features of the Google Cloud Vision API is face detection. This is useful for applications such as security systems or photo tagging. Besides locating each face in an image, the API rates how likely the face is to show each of four expressions: joy, sorrow, anger, and surprise.
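The API does not return a numeric emotion score. Each face annotation carries one likelihood value per expression (joyLikelihood, sorrowLikelihood, angerLikelihood, surpriseLikelihood), taken from the ordered set UNKNOWN, VERY_UNLIKELY, UNLIKELY, POSSIBLE, LIKELY, VERY_LIKELY. Here is a minimal sketch, using only the Java standard library, that turns those strings into a single "dominant expression" label. The class name ExpressionRanker, the dominant method, and the rule that anything below POSSIBLE counts as "neutral" are my own assumptions, not part of the API.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: picks the strongest expression from Vision API likelihood strings. */
class ExpressionRanker {
    // Likelihood values in the order the Vision API defines them, weakest first.
    private static final List<String> ORDER = List.of(
            "UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY");

    /** Rank of a likelihood string; null or unrecognised values count as UNKNOWN (0). */
    static int rank(String likelihood) {
        if (likelihood == null) {
            return 0; // List.of(...).indexOf(null) would throw, so handle null first
        }
        return Math.max(ORDER.indexOf(likelihood), 0);
    }

    /**
     * Returns the expression with the highest likelihood, or "neutral" when none
     * reaches POSSIBLE (an assumed threshold). Ties go to the earlier map entry.
     */
    static String dominant(Map<String, String> likelihoods) {
        String best = "neutral";
        int bestRank = rank("POSSIBLE") - 1;
        for (Map.Entry<String, String> e : likelihoods.entrySet()) {
            int r = rank(e.getValue());
            if (r > bestRank) {
                best = e.getKey();
                bestRank = r;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Values as they might come back from getJoyLikelihood(), getSorrowLikelihood(), etc.
        Map<String, String> face = new LinkedHashMap<>();
        face.put("joy", "VERY_LIKELY");
        face.put("sorrow", "VERY_UNLIKELY");
        face.put("anger", "VERY_UNLIKELY");
        face.put("surprise", "POSSIBLE");
        System.out.println(dominant(face)); // prints joy
    }
}
```

Using a LinkedHashMap keeps the tie-breaking order predictable; the threshold is easy to change if POSSIBLE is too permissive for your app.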

For example, imagine you are building a social media app and want to suggest tags for the people in photos. The Google Cloud Vision API can find every face in a photo and return its position, but it does not identify who the person is; matching a face to a user in your database requires a separate recognition step of your own. Combined that way, automatic tag suggestions can save a lot of time and effort for both users and administrators.
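Because the API reports where each face is rather than who it is, a tagging feature usually starts by cropping each face region out of the photo using the face's boundingPoly vertices. The sketch below, using only the standard library, converts those vertices into a crop rectangle. The FaceBox class and its {x, y} array input are hypothetical stand-ins for the client library's BoundingPoly and Vertex objects, and treating a missing coordinate as 0 is an assumption based on the JSON response omitting zero-valued fields.

```java
import java.util.Arrays;

/** Hypothetical helper: converts a face's boundingPoly vertices into a crop rectangle. */
class FaceBox {
    /**
     * @param vertices each entry is {x, y}; a null coordinate is treated as 0
     *                 (assumption: the API may omit zero-valued fields)
     * @return {left, top, width, height}
     */
    static int[] toRect(Integer[][] vertices) {
        if (vertices.length == 0) {
            throw new IllegalArgumentException("no vertices");
        }
        int minX = Integer.MAX_VALUE, minY = Integer.MAX_VALUE;
        int maxX = Integer.MIN_VALUE, maxY = Integer.MIN_VALUE;
        for (Integer[] v : vertices) {
            int x = v[0] == null ? 0 : v[0];
            int y = v[1] == null ? 0 : v[1];
            minX = Math.min(minX, x);
            minY = Math.min(minY, y);
            maxX = Math.max(maxX, x);
            maxY = Math.max(maxY, y);
        }
        return new int[] {minX, minY, maxX - minX, maxY - minY};
    }

    public static void main(String[] args) {
        // Four corners of a face box; x is missing (null) on the left edge.
        Integer[][] poly = {{null, 40}, {120, 40}, {120, 170}, {null, 170}};
        System.out.println(Arrays.toString(toRect(poly))); // prints [0, 40, 120, 130]
    }
}
```

The resulting rectangle is what you would crop and hand to whatever face-matching step your app uses.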

Another great feature of the Google Cloud Vision API is its ability to detect and extract text from images. This is useful for a wide range of applications, such as optical character recognition (OCR) for scanned documents or for text captured in photos. The API also reports the detected language of the text. It does not translate, however; for translations you would pass the extracted text to a separate service such as the Cloud Translation API.
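For OCR, the images:annotate request takes either the TEXT_DETECTION feature (suited to sparse text in photos) or DOCUMENT_TEXT_DETECTION (optimized for dense text such as scanned pages), plus an optional imageContext.languageHints list. The sketch below builds that JSON body by hand with the standard library so it needs no JSON dependency. The OcrRequestBody class is hypothetical, and the language hints are assumed to be plain codes like "en" because they are inserted without escaping.

```java
import java.util.Base64;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper: builds a REST body for OCR via the images:annotate method. */
class OcrRequestBody {
    /**
     * @param imageBytes    raw image file contents
     * @param denseDocument true for DOCUMENT_TEXT_DETECTION, false for TEXT_DETECTION
     * @param languageHints language codes such as "en"; may be empty
     */
    static String build(byte[] imageBytes, boolean denseDocument, List<String> languageHints) {
        // The REST API expects the image bytes as a Base64 string in image.content
        String content = Base64.getEncoder().encodeToString(imageBytes);
        String type = denseDocument ? "DOCUMENT_TEXT_DETECTION" : "TEXT_DETECTION";
        StringBuilder json = new StringBuilder()
                .append("{\"requests\":[{")
                .append("\"image\":{\"content\":\"").append(content).append("\"},")
                .append("\"features\":[{\"type\":\"").append(type).append("\"}]");
        if (!languageHints.isEmpty()) {
            // Hints are inserted unescaped; they are assumed to be plain codes like "en".
            String hints = languageHints.stream()
                    .map(h -> "\"" + h + "\"")
                    .collect(Collectors.joining(","));
            json.append(",\"imageContext\":{\"languageHints\":[").append(hints).append("]}");
        }
        return json.append("}]}").toString();
    }

    public static void main(String[] args) {
        byte[] fakeImage = {1, 2, 3}; // stand-in for Files.readAllBytes(...)
        System.out.println(build(fakeImage, true, List.of("en", "hi")));
    }
}
```

Language hints are optional; leaving the list empty lets the API detect the language itself.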

Google Cloud Vision API on Android: Kotlin Example

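```kotlin
import com.google.api.client.http.javanet.NetHttpTransport
import com.google.api.client.json.gson.GsonFactory
import com.google.api.services.vision.v1.Vision
import com.google.api.services.vision.v1.VisionRequestInitializer
import com.google.api.services.vision.v1.model.AnnotateImageRequest
import com.google.api.services.vision.v1.model.BatchAnnotateImagesRequest
import com.google.api.services.vision.v1.model.Feature
import com.google.api.services.vision.v1.model.Image
import java.io.File

// Build the Vision client, authenticating with an API key ("YOUR_API_KEY" is a placeholder)
val vision = Vision.Builder(NetHttpTransport(), GsonFactory.getDefaultInstance(), null)
    .setVisionRequestInitializer(VisionRequestInitializer("YOUR_API_KEY"))
    .setApplicationName("vision-face-demo")
    .build()

// Read the image; encodeContent() Base64-encodes the bytes for the request
val imgBytes = File("path/to/image.jpg").readBytes()
val img = Image().encodeContent(imgBytes)

// Ask only for face detection
val features = listOf(Feature().setType("FACE_DETECTION"))
val requests = listOf(AnnotateImageRequest().setImage(img).setFeatures(features))
val request = BatchAnnotateImagesRequest().setRequests(requests)

// Blocking network call: on Android, run this off the main thread (e.g. Dispatchers.IO)
val response = vision.images().annotate(request).execute()

// faceAnnotations is null when no face is found, so fall back to an empty list
val faceAnnotations = response.responses[0].faceAnnotations.orEmpty()

for (faceAnnotation in faceAnnotations) {
    println("Joy: ${faceAnnotation.joyLikelihood}")
    println("Anger: ${faceAnnotation.angerLikelihood}")
    println("Sorrow: ${faceAnnotation.sorrowLikelihood}")
    println("Surprise: ${faceAnnotation.surpriseLikelihood}")
}
```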

Java Code for Google Cloud Vision Face Detection

```java
import com.google.api.client.googleapis.auth.oauth2.GoogleCredential;
import com.google.api.client.googleapis.javanet.GoogleNetHttpTransport;
import com.google.api.client.json.JsonFactory;
import com.google.api.client.json.jackson2.JacksonFactory;
import com.google.api.services.vision.v1.Vision;
import com.google.api.services.vision.v1.VisionScopes;
import com.google.api.services.vision.v1.model.AnnotateImageRequest;
import com.google.api.services.vision.v1.model.AnnotateImageResponse;
import com.google.api.services.vision.v1.model.BatchAnnotateImagesRequest;
import com.google.api.services.vision.v1.model.BatchAnnotateImagesResponse;
import com.google.api.services.vision.v1.model.FaceAnnotation;
import com.google.api.services.vision.v1.model.Feature;
import com.google.api.services.vision.v1.model.Image;
import com.google.common.collect.ImmutableList;

import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.GeneralSecurityException;
import java.util.List;

public class FaceDetection {

    public static void main(String[] args) throws IOException, GeneralSecurityException {
        // Load service-account credentials from credentials.json on the classpath.
        // GoogleCredential and JacksonFactory are deprecated in recent client library
        // versions but still work; newer code uses google-auth-library and GsonFactory.
        GoogleCredential credential;
        try (InputStream in = FaceDetection.class.getResourceAsStream("/credentials.json")) {
            if (in == null) {
                throw new IOException("credentials.json not found on the classpath");
            }
            credential = GoogleCredential.fromStream(in).createScoped(VisionScopes.all());
        }

        // Create a new Vision client
        JsonFactory jsonFactory = JacksonFactory.getDefaultInstance();
        Vision vision = new Vision.Builder(GoogleNetHttpTransport.newTrustedTransport(), jsonFactory, credential)
                .setApplicationName("Google-VisionAPI-Quickstart")
                .build();

        // Read the image file into a byte array
        Path path = Paths.get("path/to/image.jpg");
        byte[] imageBytes = Files.readAllBytes(path);

        // Create the image request (encodeContent Base64-encodes the bytes)
        Image image = new Image().encodeContent(imageBytes);
        Feature feature = new Feature().setType("FACE_DETECTION");
        AnnotateImageRequest request = new AnnotateImageRequest()
                .setImage(image)
                .setFeatures(ImmutableList.of(feature));

        // Perform the request
        Vision.Images.Annotate annotate = vision.images().annotate(
                new BatchAnnotateImagesRequest().setRequests(ImmutableList.of(request)));
        BatchAnnotateImagesResponse batchResponse = annotate.execute();
        AnnotateImageResponse response = batchResponse.getResponses().get(0);

        // Extract the face annotations; the list is null when no face was found
        List<FaceAnnotation> faceAnnotations = response.getFaceAnnotations();
        if (faceAnnotations == null) {
            System.out.println("No faces detected.");
            return;
        }
        for (FaceAnnotation faceAnnotation : faceAnnotations) {
            System.out.println("Joy: " + faceAnnotation.getJoyLikelihood());
            System.out.println("Anger: " + faceAnnotation.getAngerLikelihood());
            System.out.println("Sorrow: " + faceAnnotation.getSorrowLikelihood());
            System.out.println("Surprise: " + faceAnnotation.getSurpriseLikelihood());
        }
    }
}
```

The Google Cloud Vision API can also detect objects (the OBJECT_LOCALIZATION feature) and landmarks (the LANDMARK_DETECTION feature) in an image, which is useful for image search or for creating image-based tours.
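Object localization returns a name and a confidence score for each object, and landmark detection returns a description and a score for each landmark. For image search you would typically keep only confident labels. The following is a minimal standard-library sketch of that filtering step; the Detection class is a hypothetical stand-in for the client library's annotation objects, and the 0.5 threshold in the example is an arbitrary choice.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;

/** Hypothetical helper: turns object/landmark detections into search keywords. */
class SearchKeywords {
    /** Stand-in for an object's name (or a landmark's description) plus its score. */
    static final class Detection {
        final String label;
        final float score;

        Detection(String label, float score) {
            this.label = label;
            this.score = score;
        }
    }

    /** Keeps labels scoring at least minScore, highest score first, without duplicates. */
    static List<String> keywords(List<Detection> detections, float minScore) {
        List<Detection> sorted = new ArrayList<>(detections);
        sorted.sort(Comparator.comparingDouble((Detection d) -> d.score).reversed());
        List<String> result = new ArrayList<>();
        for (Detection d : sorted) {
            String key = d.label.toLowerCase(Locale.ROOT);
            if (d.score >= minScore && !result.contains(key)) {
                result.add(key);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Detection> found = List.of(
                new Detection("Bicycle", 0.91f),
                new Detection("Person", 0.62f),
                new Detection("Bicycle", 0.55f),
                new Detection("Helmet", 0.31f));
        System.out.println(keywords(found, 0.5f)); // prints [bicycle, person]
    }
}
```

Lower-casing and de-duplicating means two detected bicycles produce one keyword, which is usually what a search index wants.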

The API is easy to use: Google provides client libraries for several languages, including Java, Python, Go, and Node.js, and you can also call the REST endpoint directly. Each call sends an image along with the list of analyses (feature types) you want performed on it.
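Instead of a client library, you can POST JSON to the REST endpoint https://vision.googleapis.com/v1/images:annotate. This sketch uses Java 11's built-in java.net.http package and authenticates with an API key passed as the key query parameter (service-account OAuth is the other common option). The VisionRestCall class and prepare method are hypothetical, and "YOUR_API_KEY" is a placeholder; the request is only built, not sent, so the code runs offline.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper: prepares (but does not send) a Vision REST request. */
class VisionRestCall {
    static final String ENDPOINT = "https://vision.googleapis.com/v1/images:annotate";

    /** Builds a POST request asking for every feature type in {@code features} on one image. */
    static HttpRequest prepare(String apiKey, byte[] imageBytes, List<String> features) {
        String featureJson = features.stream()
                .map(f -> "{\"type\":\"" + f + "\"}")
                .collect(Collectors.joining(","));
        String body = "{\"requests\":[{\"image\":{\"content\":\""
                + Base64.getEncoder().encodeToString(imageBytes)
                + "\"},\"features\":[" + featureJson + "]}]}";
        String key = URLEncoder.encode(apiKey, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(ENDPOINT + "?key=" + key))
                .header("Content-Type", "application/json; charset=utf-8")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest req = prepare("YOUR_API_KEY", new byte[] {1, 2, 3},
                List.of("FACE_DETECTION", "TEXT_DETECTION", "LANDMARK_DETECTION"));
        System.out.println(req.method() + " " + req.uri());
        // To send it: HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString())
    }
}
```

Requesting several feature types in one call means the image is uploaded once and each analysis comes back in the same response.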

Overall, the Google Cloud Vision API is a powerful and versatile tool that makes it easy to add advanced image analysis to your applications. With face detection, text extraction, object and landmark detection, and more, it can help you build innovative, engaging applications tailored to your users' needs.

Comments

-

Insertcart Custom WooCommerce Checkbox Ultimate

Original price was: $ 39.00.$ 19.00Current price is: $ 19.00. -

Android App for Your Website

Original price was: $ 49.00.$ 35.00Current price is: $ 35.00. -

Abnomize Pro

Original price was: $ 30.00.$ 24.00Current price is: $ 24.00. -

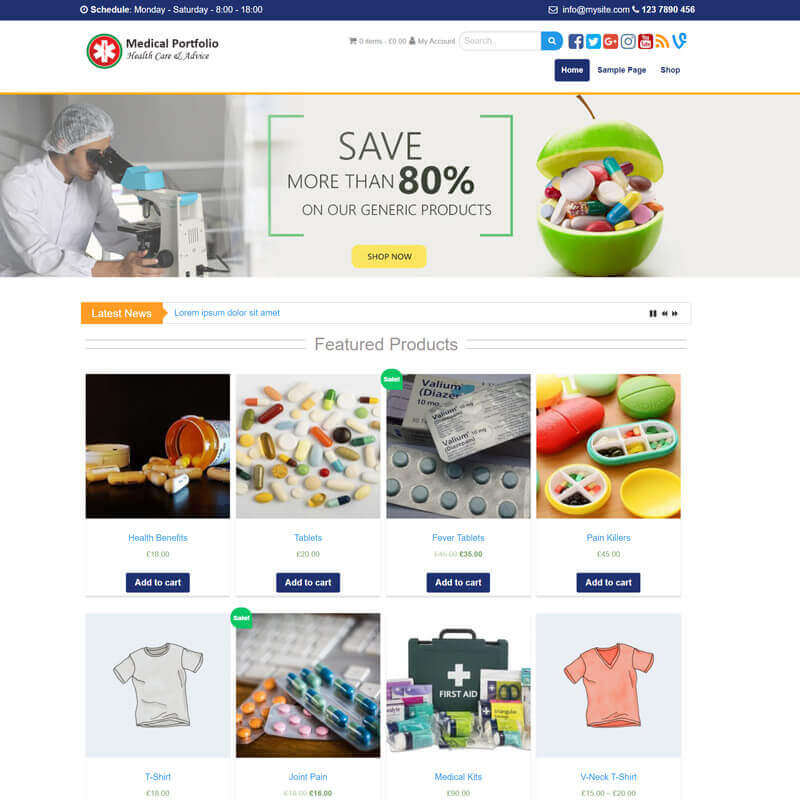

Medical Portfolio Pro

Original price was: $ 31.00.$ 24.00Current price is: $ 24.00. -

Your Website Set Up + Boost

Original price was: $ 49.00.$ 41.00Current price is: $ 41.00.

Latest Posts

- How to Use AWS SES Email from Localhost or Website: Complete Configuration in PHP

- How to Upload Images and PDFs in Android Apps Using Retrofit

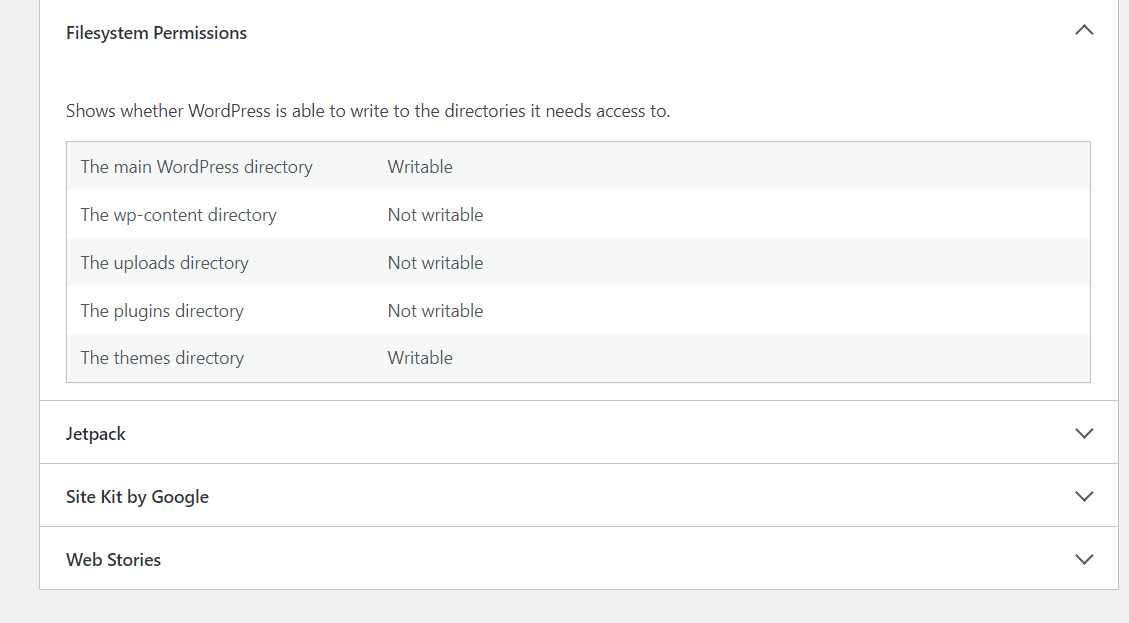

- [Fix] File Permission Issue in Apache Website WordPress Not Writable to 775

- Best PHP ini Settings for WordPress & WooCommerce: Official Recommendations

- Script to Get Users System or Browser Data When Link Open Grab Using PHP & JavaScript

Leave a Reply